This annotated bibliography is intended to accompany my CIG 2012 tutorial, but will hopefully be useful even if you didn't attend.

First cluster of systems: board games

A significant chunk of work, including the earliest, tackles board games. They have a nice formal structure, are discrete and turn-based, and there is already an established culture of inventing and analyzing game variants.

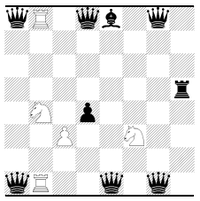

- Barney Pell (1992). METAGAME in symmetric chess-like games. In Heuristic Programming in Artificial Intelligence 3 – The Third Computer Olympiad, Ellis Horwood. Extended version appeared as University of Cambridge Computer Laboratory Technical Report UCAM-CL-TR-277.

Pell's METAGAME is, to my knowledge, the first published game generator. He defines a generative space of games more general than chess, which he calls "symmetric, chess-like games". They're encoded in a representation specific to this genre, which is also symmetric by construction. By symmetric I mean that mechanics are specified only from the perspective of one player, with the starting positions and rules that apply to the other player always being the mirror of the first player's. The rules themselves are represented in a game grammar, and generation is done by stochastically sampling from that grammar, along with some checks for basic game playability, and generative-parameter knobs to tweak some aspects of what's likely to be generated.

Pell's motivation was actually not game generation, but general game playing: by the early 1990s, there was a worry that chess-playing AI had delved too deeply into special-case code that was very specific to chess. Therefore, some researchers proposed that more fundamental advances in intelligence would be better served by forcing game-playing AI systems to tackle a wider space of games, where they couldn't hard-code as many details of a specific game, as they could if they only had to play chess. Therefore a way to define such a larger space of games was needed, which METAGAME aimed to provide.

- Vincent Hom & Joe Marks (2007). Automatic design of balanced board games. In Proceedings of the 3rd Artificial Intelligence and Interactive Digital Entertainment Conference, pp. 25-30.

Whereas METAGAME aims for rough balance by construction of its encoding, Hom & Marks propose explicitly checking for balance by simulated self-play. They define a significantly smaller space of game variation than METAGAME can handle, but optimize for balanced games by evaluating candidate games with a general-game-playing simulator, which plays some games against itself and computes a win rate. This simulation then serves as the fitness function for a genetic algorithm, which selects for games where there is little difference in win rates between the first and second player.

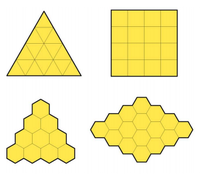

- Cameron Browne & Frederic Maire (2010). Evolutionary game design. IEEE Transactions on Computational Intelligence and AI in Games 2(1): 1-16.

Browne & Maire's system, Ludi, proposes a much more ambitious evolutionary-design framework. Criteria other than balance are introduced, including an aesthetic theory of board-game design. In addition, the search space is much larger than Hom & Mark's, constructed via recombination of game elements (ludemes). In addition, there is an explicit notion of game distance to distinguish game variants from new games. (The paper also includes quite a bit of interesting design discussion and justification that I don't do justice in this brief summary.)

Second cluster of systems: graphical logics

Another significant chunk of work generates games roughly in the style of classic arcade games. The basic components are: 2d elements that might move, of several kinds, one of which is typically controlled by a single player. There are usually collisions, which might have various effects, and some kind of points system or goal.

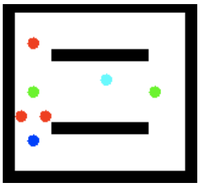

- Julian Togelius & Jürgen Schmidhuber (2008). An experiment in automatic game design. In Proceedings of the 2008 IEEE Conference on Computational Intelligence and Games, pp. 111-118.

In graphical-logic games, it's not as clear what "balance" means, and there isn't the same worked-out theory of aesthetics and game design. Togelius and Schmidhuber propose the following criterion as a general and domain-agnostic one: "a game is fun if it is learnable but not trivial". They justify this partly by reference to theories of gameplay fun, and curiosity. (I wonder if it might also be the one-player analog of "balance"?) The concrete operationalization of that principle is that a game is good if a controller can evolve to beat it, but not too easily. Gameplay rules themselves are also evolved, and controllers are evolved to evaluate each set of rules.

- Adam M. Smith & Michael Mateas (2010). Variations Forever: Flexibly generating rulesets from a sculptable design space of mini-games. In Proceedings of the 2010 IEEE Conference on Computational Intelligence and Games, pp. 273-280.

Variations Forever focuses on graphical-logic games as well, but on declaratively defining spaces of games. The goal is to minimize the up-front ontological commitments, and provide a way to include or exclude games from a generative space based on specific criteria. The aim is to provide tools for defining and "sculpting" generative spaces, rather than optimizing within predefined spaces. The representational/reasoning framework they use is answer-set programming.

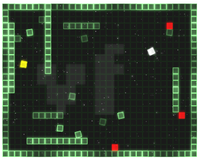

- Michael Cook & Simon Colton (2011). Multi-faceted evolution of simple arcade games. In Proceedings of the 2011 IEEE Conference on Computational Intelligence and Games, pp. 289-296.

In one version of their larger project Angelina, Cook & Colton propose to blur the boundary between PCG and game-mechanics generation, by integrating concerns such as level-generation and NPC generation into the same system. The hope is to find synergy as elements play off each other, while managing complexity with modularity and a notion of internal-to-a-module and external-to-a-module fitness criteria.

Generating things other than mechanics

I've proposed factoring game design into four areas: abstract mechanics, concrete representations, thematic elements, and input mappings. So far the systems discussed generate mechanics, some more abstract than others. What about the other components?

- Jon Orwant (2000). EGGG: Automated programming for game generation. IBM Systems Journal 39(3-4): 782-794.

Orwant wrote a system that targets the mapping from an abstract game (state, events, etc.) into a specific layout of visual elements that makes the game playable. It even generates a manual explaining how to play the game. Whether it's a game "generator" is an interesting question. One interpretation is that it's more of a game "compiler", since it lays out specific genres of games according to a set of rules, although these are extensible. It has connections to the software-engineering field of automatic programming, essentially defining a domain-specific programming language for game design.

- Mark J. Nelson and Michael Mateas (2007). Towards automated game design. In AI*IA 2007: Artificial Intelligence and Human-Oriented Computing, Springer Lecture Notes in Computer Science 4733, pp. 626–637.

- Mark J. Nelson and Michael Mateas (2008). An interactive game-design assistant. In Proceedings of the 2008 International Conference on Intelligent User Interfaces, pp. 90–98.

Mateas and I look into what relationship there is between mechanics and the "skin", i.e. the choice of sprites and thematic elements. By looking at the simple space of WarioWare-style games, we propose that mechanics define nouns and verbs in the gameplay world, and skinning has certain implied constraints on what mappings make sense to those nouns/verbs. We implement an auto-skinning system based on editable constraint spaces using a commonsense-reasoning knowledge base, ConceptNet.

- Mike Treanor, Bryan Blackford, Michael Mateas & Ian Bogost (2012). Game-O-Matic: Generating videogames that represent ideas. In Proceedings of the 2012 Workshop on Procedural Content Generation.

- Mike Treanor, Bobby Schweizer, Ian Bogost & Michael Mateas (2012). The micro-rhetorics of Game-O-Matic. In Proceedings of the 2012 Conference on the Foundations of Digital Games, pp. 18-25.

The Game-O-Matic project asks whether we can generate games that are "about" something. The user supplies a concept map of what they want a game about, and the system has a codified set of strategies in simple, arcade-style games that it can use to map the user's concepts to a videogame. (I don't attempt to summarize here the actual approach, given in the above papers.)

The remaining area in my factorization, input mappings, is a very interesting area that doesn't appear to have had any game-generation work done on it yet. Systems typically take input methods as a given, e.g. the presence of several control methods and a "player" entity in graphical-logic games, or an ontology of movement types in board games. But what about, for example, the difference between controlling a game with a joystick, keyboard, or Kinect? Or even leaving aside physical control, where the player interacts in the game world: keeping identical mechanics but having the player control a different entity in the world, or controlling it via a different method, can produce very different games.

Connections to other areas

Game generation has, I would propose, close connections to two other areas of research. Game-mechanic encodings are effectively an operationalized game ontology, so we might look at what game-studies researchers say about ontologies, especially those who take a more formal approach that might be closer to practical use in computational systems. In addition, an emerging area of design-support systems also makes use of formalized game-mechanic encodings, but to produce tools for game designers, rather than game-generation systems.

- Staffan Björk & Jussi Holopainen (2004). Patterns in Game Design. Charles River Media.

- Cameron Browne (2005). Connection Games: Variations on a Theme. A.K. Peters.

- José P. Zagal, Michael Mateas, Clara Fernández-Vara, Brian Hochhalter & Nolan Lichti (2005). Towards an ontological language for game analysis. In Proceedings of the 2005 Conference of the Digital Games Research Association.

- Jesper Juul (2007). Swap adjacent gems to make sets of three: A history of matching tile games. Artifact Journal 2.

- José P. Zagal, Clara Fernández-Vara & Michael Mateas (2008). Rounds, levels, and waves: The early evolution of gameplay segmentation. Games and Culture 3(2): 175-198.

These are examples of some of the more formal approaches to game ontology in game studies (though not formalized in the computer-science sense) which analyze game elements. Björk/Holopainen and Zagal et al. (2005) aim to produce methods of organizing game-design elements systematically. Browne looks specifically at one class of games, and variations within that space; he's himself, since then, adapted and formalized some of those insights in his work on the Ludi system. Pell (cited earlier) also draws on theories of board-games variation, mentioning the early-20th-century work of T.R. Dawson on chess variants as a source for some of his domain formalization. Finally, among this brief selection, both Juul and Zagal et al. (2008) trace elements in specific genres using a historical/genealogical method, looking at how certain common patterns are borrowed and mutated between games. That's viewable as akin to doing a history of the "wetware" implementation of evolutionary game-design.

- Joris Dormans (2009). Machinations: Elemental feedback structures for game design. In Proceedings of GAMEON-NA 2009, pp. 33-40.

- Adam M. Smith, Mark J. Nelson & Michael Mateas (2009). Computational support for play testing game sketches. In Proceedings of the 5th Artificial Intelligence and Interactive Digital Entertainment Conference, pp. 167-172.

These systems formalize game mechanics to give feedback on designs in progress to game designers prototyping their systems. Dormans uses a petri-net based graphical formalization of mechanics, while Smith et al. use a temporal logic based on the event calculus. Dormans mainly uses simulation to analyze the mechanics, while Smith et al. use theorem-proving in answer-set programming.

Modular and extensible ontologies

A research challenge I'd like to propose: can we build modular and extensible ontologies, to encode knowledge across many genres, perhaps capturing existing genres and elements, and allowing for additions and reconfiguration? Existing work formalizing specific domains (such as board games) can serve as a basis. There are many directions that it could go from there. Perhaps the Cyc of game design? Or else more like a formalized version of the Game Ontology Project or even TVTropes?

- Doug Church (1999). Formal abstract design tools. Gamasutra.

- Mark J. Nelson & Michael Mateas (2008). Recombinable game mechanics for automated design support. In Proceedings of the 4th Artificial Intelligence and Interactive Digital Entertainment Conference, pp. 84-89.

- Michael Mateas & Noah Wardrip-Fruin (2009). Defining operational logics. In Proceedings of the 2009 Conference of the Digital Games Research Association.

In the game industry, a proposal that's received much discussion is a 1999 article by Doug Church (System Shock, Thief, etc.), who argued for formalizing the design language of games into a reusable vocabulary. In academia, there are some existing concepts we could draw on. Browne & Maire (cited above) discuss the concept of ludemes, which is one possible basis. Mateas & Wardrip-Fruin define another possible basis, operational logics, which are domains with bundles of elements, dynamics, and representational techniques; the graphical logics mentioned earlier (Wardrip-Fruin's term) are one example.

My own work on recombinable game mechanics proposes a sort of quick-and-dirty operationalization of a similar concept, by defining vocabularies that form a background language of elements and events, and mechanics that are defined by reference to those vocabularies. For example, an inventory system is a vocabulary, bringing in the notion of "inventory" and "items" and "picking up" and similar nouns and verbs. Specific mechanics, on the other hand, such as "colliding with an item on the world map picks it up, unless inventory is full" are defined in reference to one or more vocabularies. In this example, the mechanic references both an item-system vocabulary and a world-map-movement vocabulary, with this mechanic providing a connection between them. This vocabulary/mechanics split provides one approach to extensible domains, though it is, to be sure, still a fairly flat kind of modularity.